SKILLS: Fabrication | Illustrator | Experience Design | Arduino | Hardware Prototyping | Raspberry Pi

SUMMARY: PopPop is a new type of living pedestrian signal that interacts with a street corner. Are there too many jaywalkers? How crowded is the corner? Is it rainy? PopPop knows and reacts accordingly. It is temporarily located in NYC, at Broadway & Waverly.

Project Background: PopPop was part of a special Microsoft Research Fuse Labs project set up specifically to explore sentient data and the idea of how you can add sentiment/emotions to data. With more and more objects becoming connected devices (the “Internet of Things”), we felt it was important to explore the relationships that can come from these objects, and how they may make our lives more interesting and fun. We also wanted to explore how personification can change people’s relationship to an object.

Team and your role: Alexandra Coym, Sam Slover, and Steve Cordova, all Master’s students in tech and design at NYU’s Interactive Telecommunications Program.

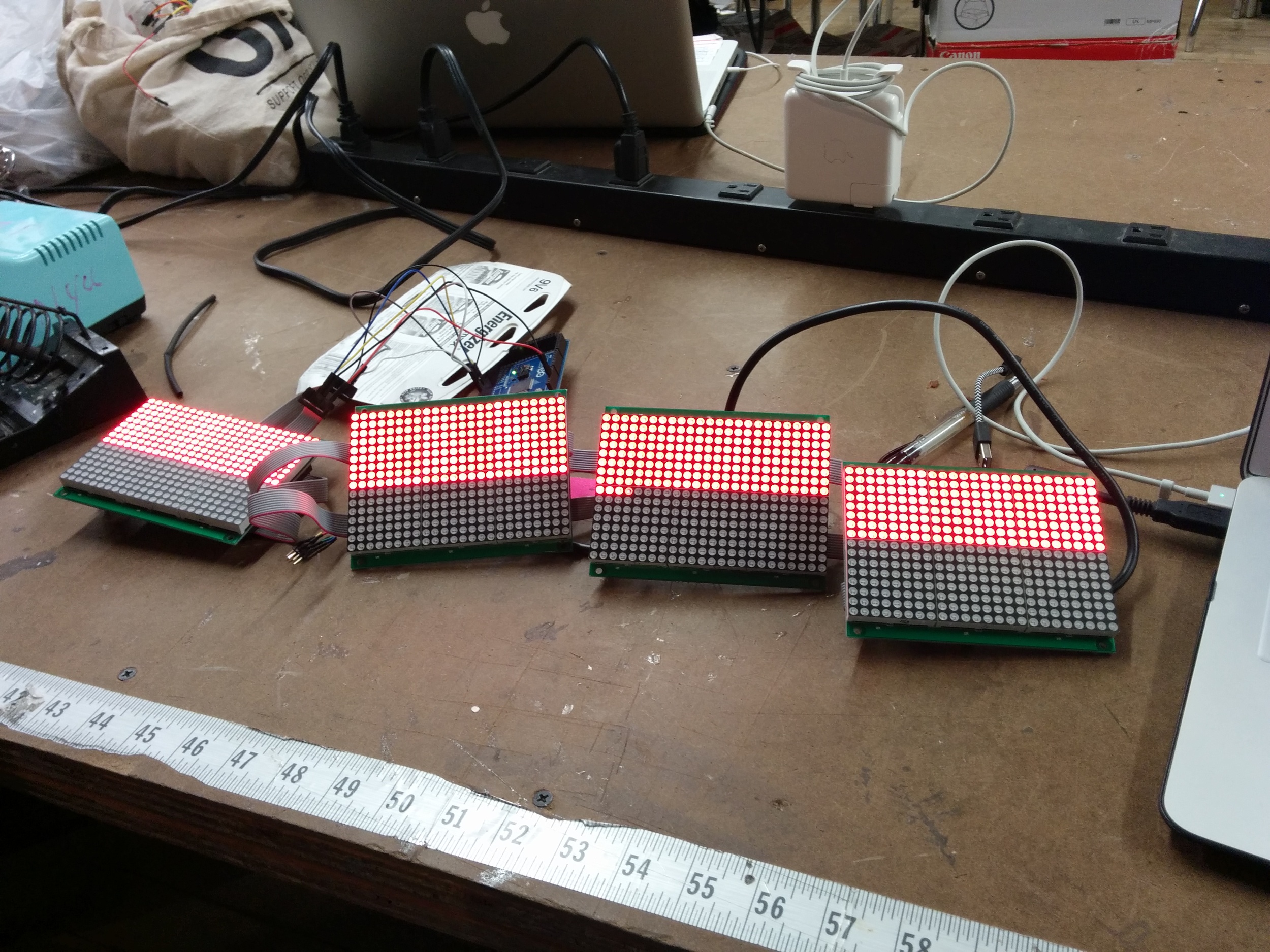

The Work: For the physical build of the pedestrian signal, we constructed two custom LED matrix panels, each powered and updated by an Arduino Yun. One panel depicts PopPop’s current emotional state, and the other shows the corresponding text that is communicated to passers-by.

The signal panels are built from pine, Masonite, and black plexiglass so that they look as much like a real pedestrian signal as possible.

The Web system is a Node.js app that collects real-time data through Mechanical Turk and several hyper-local APIs. Every few minutes, the app asks Mechanical Turk workers to watch a live feed and report quantitative data on what they see happening at the intersection (the camera is set up so that faces cannot be detected). The app then combines this data with data from other APIs (weather, traffic, crime) and runs a sentiment analysis to come up with a current emotion. This can be anything from upbeat to happy to a bit down, and more.
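To make the idea concrete, here is a minimal sketch of how such readings could be collapsed into one emotion. It is not our production code: the field names, the weights, the thresholds, and the assumption that crowding and rain lower the mood are all hypothetical, and it leaves out the traffic and crime inputs for brevity.

```typescript
// Hypothetical shape of one round of observations; field names are illustrative.
interface IntersectionReading {
  jaywalkers: number;  // count reported by crowd workers
  crowdLevel: number;  // 0 (empty) to 1 (packed), reported by crowd workers
  isRaining: boolean;  // taken from a weather API
}

// Three of the emotions named in the write-up; the real system had more.
type Emotion = "upbeat" | "happy" | "a bit down";

// Collapse a reading into a mood score in [0, 1]. All weights are made up.
function moodScore(r: IntersectionReading): number {
  const jaywalkPenalty = Math.min(Math.max(r.jaywalkers, 0), 10) / 10; // cap at 10
  const crowdPenalty = Math.min(Math.max(r.crowdLevel, 0), 1);         // clamp to [0, 1]
  const rainPenalty = r.isRaining ? 1 : 0;
  const penalty = 0.5 * jaywalkPenalty + 0.3 * crowdPenalty + 0.2 * rainPenalty;
  return 1 - penalty;
}

// Map the score onto an emotion using assumed thresholds.
function emotionFor(r: IntersectionReading): Emotion {
  const score = moodScore(r);
  if (score >= 0.8) return "upbeat";
  if (score >= 0.5) return "happy";
  return "a bit down";
}
```

For example, `emotionFor({ jaywalkers: 0, crowdLevel: 0, isRaining: false })` yields the most positive emotion in this sketch, while a rainy, crowded corner full of jaywalkers pushes the score toward "a bit down".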

When a new emotion is computed, it is automatically pushed to both the physical pedestrian signal (via the Web-enabled Arduino Yuns) and the website’s front-end (via WebSockets).
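The update step can be sketched as a small publisher that notifies every output at once. This is an illustration under assumptions, not our actual implementation: the `EmotionBroadcaster` class and its methods are hypothetical, the listeners stand in for the Arduino Yuns and the WebSocket clients, and skipping the broadcast when the emotion is unchanged is an assumed behavior.

```typescript
// A listener stands in for one output: a signal panel or a connected browser.
type Listener = (emotion: string) => void;

// Hypothetical helper that fans out each emotion change to all outputs.
class EmotionBroadcaster {
  private current: string | null = null;
  private listeners: Listener[] = [];

  // Register an output that should hear about every change.
  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  // Returns true if the emotion changed and the listeners were notified,
  // false if it matched the current emotion and nothing was sent.
  update(emotion: string): boolean {
    if (emotion === this.current) return false;
    this.current = emotion;
    for (const listener of this.listeners) {
      listener(emotion);
    }
    return true;
  }
}
```

In a setup like ours, one listener would forward the emotion to the signal panels and another would emit it to the website, so both always show the same state.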

Success Metrics: We started this project with a proposal that stated we wanted to personify neighborhoods in a way that is directly correlated to the feelings of the people in that neighborhood. We spent a lot of time thinking about which physical output would display this best, and while discussing the readings, came to the conclusion that we wanted to animate a non-human physical object. Particularly the documentation of MIT’s Blendie and the NYTimes article stuck with us: “(…) given the right behavioral cues, humans will form relationships with just about anything - regardless of what it looks like. Even a stick can trigger our social promiscuity.” This was something we wanted to explore further.

We quickly realized that the scope and the data sets we had been considering (Foursquare, facial expressions, etc.) would be so subjective and broad that they would be too disconnected from whatever physical object we wanted to breathe life into. Even within a neighborhood, emotional states can vary greatly depending on the crossing or block you happen to be on. We wanted people to relate to their neighborhood, to really connect with it, so we felt we had to be even more site-specific.

Through our research, we've discovered that by making a traditional object sentient and giving it a human voice and personality, we can more effectively prompt people to perform actions that will make the object happier. This is even more the case when the user sees the connected object every day and starts noticing that its personality and mood change based on how safe people are being on the street. We have seen through user testing that people WANT to make their intersection's sentient pedestrian signal happy by being safer on the streets, and that when a traditional pedestrian signal is given a broader human voice and personality, people are much more likely to look up, heed its suggestions, and engage with it.

On a broader level, while most of the conversations around connected devices have centered on utilitarian purposes (for example, the quintessential refrigerator that knows when you’re out of milk and orders more), we believe that connected objects can be used in cities to brighten life and help people be safer.

What You Would Have Done Differently: The prototype relies on human intelligence, but we’d like to use machine learning, computer vision, and sensors to automate the process so that it can scale. The idea of animating control systems in cities by giving them human character is important. Giving human characteristics to machines in this way is known as affective computing. Connecting to the object's mood gives you an affective bump and in turn creates positive interactions. People respond to a smile. We would also have installed our devices at multiple locations to get more varied responses on the efficacy of the device.

Further documentation can be found on our project blog and the project website.